This is a compilation of my notes and insights from week 8 of the Machine Learning ZoomCamp.

What is Deep Learning?

One of my goals in starting the Machine Learning ZoomCamp was to deepen my understanding of the fundamentals driving recent advances in Machine Learning and Generative AI. I was particularly excited to begin Week 8, which delved into TensorFlow and Keras, two pivotal libraries in deep learning research and application.

At its heart, deep learning comprises techniques for constructing sophisticated neural networks, which are biologically inspired machine learning models. These networks consist of multiple “layers”: an input is passed through each layer in turn, and each layer transforms it to pick out patterns or features.
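To make the idea of passing an input through successive layers a little more concrete, here is a minimal NumPy sketch of a forward pass through two fully connected layers. The layer sizes, the random weights, and the helper names (`dense`, `relu`, `sigmoid`) are hypothetical and chosen only for illustration; in a real network the weights are learned during training.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

def dense(x, weights, bias, activation):
    """One fully connected layer: weighted sum of the inputs plus a bias, then a nonlinearity."""
    return activation(x @ weights + bias)

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical sizes: 4 input features -> 8 hidden units -> 1 output.
# The weights are random placeholders; training would adjust them.
w1, b1 = rng.normal(size=(4, 8)), np.zeros(8)
w2, b2 = rng.normal(size=(8, 1)), np.zeros(1)

x = np.array([0.5, -1.2, 3.0, 0.7])      # a single example input
hidden = dense(x, w1, b1, relu)          # layer 1: intermediate features
output = dense(hidden, w2, b2, sigmoid)  # layer 2: squashes features into a 0..1 score
print(hidden.shape, output)
```

Each layer only sees the previous layer's output, which is what lets deeper networks build more abstract features on top of simpler ones.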

Building a Simple Neural Network Using Deep Learning

For this week's project, I constructed a basic convolutional neural network (CNN) using Keras. The task was to determine whether an image depicted a bee or a wasp, using a bee-vs-wasp dataset from Kaggle. Remarkably, the model required only a few lines of code to process the 150x150 RGB images in the training set. It consisted of a 2D convolutional layer with 32 learnable 3x3 filters, a pooling layer to downsample that layer's output, a 64-neuron dense layer, and finally a single-neuron dense layer producing the binary classification: bee (0) or wasp (1).
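The architecture described above can be sketched in Keras roughly as follows. This is a minimal sketch, not the exact homework code: the layer sizes come from the description, while the ReLU and sigmoid activations, the `Flatten` layer that connects the pooling output to the dense layers, and the SGD learning rate and momentum are assumptions.

```python
import numpy as np
from tensorflow import keras

def build_model(input_shape=(150, 150, 3)):
    """Small bee-vs-wasp CNN. Activations, Flatten and optimizer settings are assumptions."""
    model = keras.Sequential([
        keras.Input(shape=input_shape),
        # 32 learnable 3x3 filters slid over the RGB image (no padding: 150x150 -> 148x148)
        keras.layers.Conv2D(32, (3, 3), activation="relu"),
        # 2x2 max pooling halves height and width (148x148 -> 74x74)
        keras.layers.MaxPooling2D((2, 2)),
        # unroll the 74x74x32 feature maps into one long vector for the dense layers
        keras.layers.Flatten(),
        keras.layers.Dense(64, activation="relu"),
        # a single sigmoid unit outputs the probability of class 1 (wasp)
        keras.layers.Dense(1, activation="sigmoid"),
    ])
    model.compile(
        optimizer=keras.optimizers.SGD(learning_rate=0.002, momentum=0.8),  # assumed values
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return model

model = build_model()
model.summary()

# One dummy (all-black) image in, one probability out.
prob_wasp = model(np.zeros((1, 150, 150, 3), dtype="float32"))
```

Under these assumptions the model has 11,215,873 parameters, and more than 99% of them sit in the 64-neuron dense layer, because it connects to every one of the 74 x 74 x 32 = 175,232 values coming out of the pooling layer.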

During training, each 3x3 filter is convolved with (slid over) the training images, producing at every position a score for how strongly that patch of the image matches the filter. Each epoch (one full pass through the training set) adjusts the weights, with the goal of learning which patterns best distinguish a bee from a wasp in the final model.
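The sliding-and-scoring step can be illustrated with a small NumPy sketch. It is a simplification under stated assumptions: it handles a single grayscale channel (a real `Conv2D` layer also sums across the three RGB channels and adds a bias), and the function name `convolve2d_valid` and the hand-made edge filter are hypothetical. During training the filter values would be learned rather than written by hand.

```python
import numpy as np

def convolve2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` (stride 1, no padding) and return a score per position.

    Like Keras' Conv2D, this is technically cross-correlation: the kernel is not flipped.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]
            out[i, j] = np.sum(patch * kernel)  # element-wise product, then sum = match score
    return out

# A 6x6 "image" that is dark on the left half and bright on the right half...
image = np.zeros((6, 6))
image[:, 3:] = 1.0
# ...and a 3x3 filter that responds to dark-to-bright vertical edges.
edge_filter = np.array([[-1.0, 0.0, 1.0]] * 3)

scores = convolve2d_valid(image, edge_filter)
print(scores)  # each row is [0. 3. 3. 0.]: the score peaks where the filter straddles the edge
```

Without padding, the output shrinks by two pixels in each dimension, which is why a 150x150 input becomes a 148x148 feature map for each of the 32 filters.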

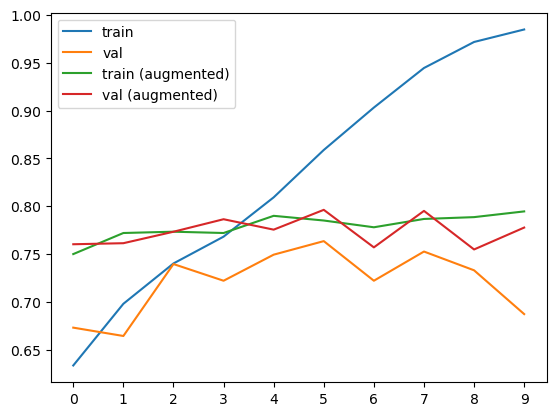

Training on the dataset alone wasn’t enough, though: the model overfit the training data pretty quickly. Applying a set of recommended transformations and augmentations to the training set helped stabilize the model, as the graph below shows by tracking the accuracy score across multiple epochs of training.
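One way to express this kind of augmentation in Keras is with its image preprocessing layers. The sketch below is an assumption for illustration: the specific transformations and their strengths are not necessarily the set recommended in the course, and the course material may have used a different Keras API to apply them.

```python
import numpy as np
from tensorflow import keras

# Hypothetical augmentation pipeline; transformations and strengths are illustrative.
augment = keras.Sequential([
    keras.layers.RandomFlip("horizontal"),                          # mirror left/right
    keras.layers.RandomRotation(0.14, fill_mode="nearest"),         # up to ~50 degrees either way
    keras.layers.RandomTranslation(0.1, 0.1, fill_mode="nearest"),  # shift up to 10% vertically/horizontally
    keras.layers.RandomZoom(0.1, fill_mode="nearest"),              # zoom in/out by up to 10%
])

# A stand-in batch of two 150x150 RGB images with values in [0, 1).
batch = np.random.rand(2, 150, 150, 3).astype("float32")

train_view = augment(batch, training=True)   # randomly transformed copies
eval_view = augment(batch, training=False)   # passed through unchanged
```

Because these layers only transform images when `training=True`, the model sees a slightly different version of each training image every epoch, which makes it harder to memorize the training set, while validation and test images are left untouched.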

Comparing accuracy before and after augmentation

Accuracy on the test set peaked at 79%, which isn’t great, but the minimal effort and computational resources required to build this model were impressive.

Additional Learning

I must highlight Jeremy Howard’s excellent deep learning course at fast.ai. I intend to dive further into this resource and the accompanying book after I complete the ZoomCamp course.

The Keras documentation was also notably well-organized and user-friendly. It offers a wealth of information for those seeking to understand the forefront of deep learning, including API documentation, guides, and comprehensive examples.

Stanford’s CS231n is another valuable resource, frequently cited in the ZoomCamp videos. I look forward to exploring it more in the coming weeks.

Finally, tools like the TensorFlow Playground and Google’s Teachable Machine are excellent for experimenting with deep learning concepts. They have greatly helped my understanding of the field.

11/9/2023: Added more detail explaining the layers in the homework project.